What is the Hyperautomation Struggle?

A large portion of enterprise revenue is directly affected by the availability and security of IT infrastructure. This puts IT organizations under extreme pressure to ensure business continuity, accommodate growth, and help increase business value. But they struggle with one significant challenge: keeping operations running during economic recessions, natural disasters, or international conflicts, when physical access to sites is limited or outright prohibited. This challenge is exacerbated by the growth of distributed infrastructure, especially edge computing, which Gartner predicts will process 75% of enterprise-generated data. Gartner also states that the answer lies in complete network automation, also known as hyperautomation. However, network automation comes with a fear-inducing stigma.

Speak with any enterprise IT team and it’s easy to understand why they’re afraid to automate. One mistake, configuration error, or wrong command can bring down the whole production network or, worse, make the network “cut its own legs off,” so to speak. That means severing every avenue for recovering the network, which leaves the IT team to rebuild from scratch and becomes a “resume-generating event,” as one of our working group members calls it.

But there is good news. Over the last decade, hyperscalers and tech giants have implemented and nearly perfected hyperautomation, not only in data centers but across a wide range of edge and branch locations. Having worked directly with these companies, we can now share our understanding of the building blocks, components, and automation infrastructure required for, at the very least, network hyperautomation. This checklist will help enterprises of any size begin their network automation journey with the right building blocks and best practices.

Building Blocks of Hyperautomation

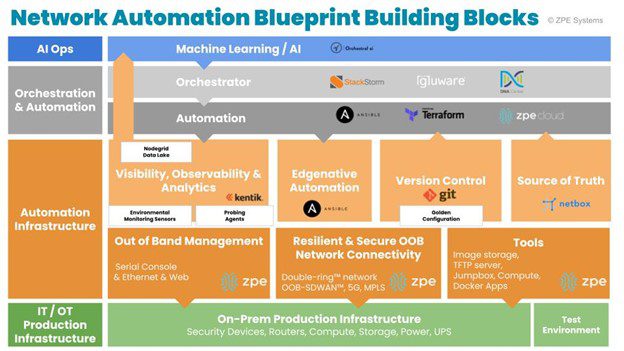

In a previous post we introduced the building blocks for hyperautomation. These building blocks are summarized below. In this post we’ll draw attention to the elements specific to the automation infrastructure and provide examples of best practices to follow.

| Building block | Description |
| --- | --- |
| AI Ops | This building block consists of the infrastructure that enables more efficient management of automation, such as machine learning and artificial intelligence to take automated actions. |
| Orchestration & Automation | This building block consists of the Orchestration and Automation infrastructure that enables management of the target infrastructure. To enable hyperconverged automation, this layer must support IT infrastructure like servers, routers, firewalls, and OT (Operational Technology) infrastructure, which includes a wide range of industrial systems, building management systems, power, sensors, and IoT. |
| Automation Infrastructure | This building block provides the integration and connectivity from the Orchestration and Automation layer to the IT/OT Production layer. This consists of the hardware, which provides an automation control plane network with secure and resilient connections such as 4G/5G out-of-band devices, VPN, IP-based access, and Serial Console connections; and software that enables the automation to occur, which includes file servers, jump boxes, source of truth, and Version Control systems. |
| IT / OT Production Infrastructure | This building block consists of the existing enterprise production infrastructure and operation infrastructure that needs to get automated, such as servers, routers, switches, applications, as well as cloud infrastructure and building management systems, industrial solutions, and IoT. |

Automation Infrastructure Blueprint

The automation infrastructure is a foundational element of hyperautomation best practices and plays a crucial role in eliminating the anxiety IT teams face. Automation anxiety often originates from these facts and scenarios:

- Automation is software, and software is written by humans, so it can contain bugs or algorithmic errors that could take down the network.

- Automation relies on the infrastructure behaving exactly as modeled. When a switch is upgraded, it needs to reboot and come back up in the upgraded state. But what if it hangs because of a full disk or a firmware bug that the lab units didn’t catch? How do you recover 1,000 production NGFW gateways that failed to upgrade?

- What if the production server’s BGP routes were inserted incorrectly and blackholed the management traffic? How do you reconfigure it?

- What if an edge network loses the WAN link it uses to reach its golden config? How do you recover it automatically at scale?

- How do you perform regular firmware and security updates for a wide range of vendor solutions, automatically and at scale, to protect against ransomware and outsider attacks?

- Without touching many different tools, how do you holistically update configurations across a wide range of vendors and technologies, automatically and at scale?

To solve these problems, here’s how the building blocks are arranged and how the automation infrastructure breaks down into its essential elements (see graphic).

Automation Infrastructure Layer

The automation infrastructure layer provides resilient connectivity and a robust automation environment, with special elements that include security and a vendor- and device-neutral recovery option. This layer combines the hardware and software that form the core of the network automation blueprint, and it breaks down into these smaller building blocks:

- Visibility, Observability & Analytics

- Source of truth

- Version Control

- Edgenative Automation

- Out-of-Band Management

- Resilient & Secure OOB connectivity

- Tools

These sub-building blocks should follow a best practice network automation blueprint. As an example, consider the out-of-band management and out-of-band connectivity building blocks.

The key role of these two blocks is to provide a completely separate network and path for automation that the automation itself cannot disrupt. Because automation relies on software, bugs and errors can be introduced that take down the network. Even with heavy lab testing and mechanisms like ‘digital twin modeling,’ it’s easy to overlook a bug or a full log disk capable of locking up a switch. With so little room for error, anxiety abounds, and to remove it, teams must have automation infrastructure that’s truly separate from production infrastructure. Here are two best practices and their advantages:

- It’s critical to have local connectivity to each element of the production network, including dedicated Ethernet ports and RS-232 serial console ports of switches, routers, and PDUs. Console port connectivity offers two advantages: it ensures that the infrastructure can always be recovered (even from low-level failures requiring console access), and it provides better security, since only the serial console device can access the infrastructure element, thereby eliminating east-west attacks through IP.

- It’s critical to have truly separate production and automation networks, so one is not affected by an outage or interruption of the other. This is called the out-of-band infrastructure WAN (OOBI-WAN™), or double-ring architecture (see figure below). The OOBI-WAN outer ring can be composed of a dedicated MPLS or fiber connection on a system that’s separate from the production network, or it can be built via manual setup of a VPN or the newly automated out-of-band SD-WAN (OOBI-SDWAN™).
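
To make the idea of a separate recovery path concrete, here is a minimal Python sketch of an automation step that tries the in-band path first and falls back to an out-of-band serial console when production management traffic is unreachable. The `Path` class, the path names, and the two simulated transports are hypothetical and exist only for illustration; real automation would use whatever connection methods your tooling provides.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Path:
    """One way of reaching a device (all names here are hypothetical)."""
    name: str                      # e.g. "in-band-ssh", "oob-serial-console"
    send: Callable[[str], bool]    # returns True if the command was delivered


def run_with_fallback(command: str, paths: List[Path]) -> Optional[str]:
    """Try each path in order and return the name of the first that works."""
    for path in paths:
        try:
            if path.send(command):
                return path.name
        except ConnectionError:
            # Treat an unreachable path like a failed one and keep going.
            continue
    return None


def broken_in_band(command: str) -> bool:
    # Simulates production management traffic being blackholed.
    raise ConnectionError("in-band management unreachable")


def serial_console(command: str) -> bool:
    # Simulates a console server that still reaches the device locally.
    return True


paths = [
    Path("in-band-ssh", broken_in_band),
    Path("oob-serial-console", serial_console),
]
used = run_with_fallback("rollback to last known-good config", paths)
print(used)  # prints "oob-serial-console"
```

The point of the sketch is the ordering: the recovery path only helps if it does not depend on anything the failed change could have touched.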

Security

As you can imagine, automation requires access to all critical components of your network infrastructure. Security must therefore be a top consideration and baked into every building block. For example, the serial console element used in automation infrastructure needs to be secure at the hardware layer (encrypted disks), OS layer (secure boot), and software and management layers (two-factor authentication and separation of duties). This prevents attackers from gaining access and moving laterally across your infrastructure.

The Checklist for Automation Infrastructure

Follow this checklist to ensure your automation infrastructure implementation includes all the necessary sub-building blocks:

Visibility, Observability & Analytics

- Ensure that the infrastructure has probes to collect the necessary data points to feed orchestration and AI Ops layers

- For physical locations, ensure deployment of environmental sensors that can be used for predictive analytics, proactive orchestration, and root cause analysis

- Receive high-fidelity event notifications and limit overload from false positives and low-importance events. These notifications can then drive closed-loop automation and feed into the AI Ops layer
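
As a rough illustration of limiting event overload, the Python sketch below drops low-severity events and collapses repeats before anything is handed to closed-loop automation. The `Event` fields, the 1-to-5 severity scale, and the threshold are assumptions made for this example, not part of any particular monitoring product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    device: str
    kind: str
    severity: int  # hypothetical scale: 1 = informational, 5 = critical


def high_fidelity(events, min_severity=3):
    """Drop low-severity events and collapse repeats of the same device/kind."""
    seen = set()
    kept = []
    for event in events:
        key = (event.device, event.kind)
        if event.severity < min_severity or key in seen:
            continue
        seen.add(key)
        kept.append(event)
    return kept


raw = [
    Event("pdu-01", "power-loss", 5),
    Event("pdu-01", "power-loss", 5),        # repeat of the previous event
    Event("sw-07", "fan-speed-change", 1),   # below the severity threshold
    Event("sensor-3", "temperature-high", 4),
]
for event in high_fidelity(raw):
    print(event.device, event.kind)
```

Only the first `pdu-01` power-loss event and the `sensor-3` temperature event survive the filter. In practice the threshold and the deduplication key would be tuned per site.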

Source of truth

- Ensure only a single source of truth

- Source of truth must support APIs to integrate with automation solution
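
One common use of a single source of truth is drift detection: comparing what the source of truth says a device should look like with what the device actually reports. The sketch below assumes both sides have already been fetched (for example through the source of truth's API) and reduced to plain dictionaries. The setting names and values are invented for the example.

```python
def find_drift(intended: dict, actual: dict) -> dict:
    """Return {setting: (intended, actual)} for every setting that differs."""
    drift = {}
    for key in sorted(intended.keys() | actual.keys()):
        want, have = intended.get(key), actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical data: what the source of truth holds vs. what the device reports.
intended = {"hostname": "edge-sw-01", "mgmt_vlan": 99, "ntp": "10.0.0.1"}
actual = {"hostname": "edge-sw-01", "mgmt_vlan": 1, "ntp": "10.0.0.1"}

print(find_drift(intended, actual))  # prints {'mgmt_vlan': (99, 1)}
```

With more than one source of truth, the `intended` side of this comparison becomes ambiguous, which is why the checklist insists on a single one.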

Version Control

- Must have a single repository of configurations

- Must have a single repository of golden firmware images

- Must have a single repository of configuration backups

- Must have good integration with and support for the automation solution, to limit operational overhead
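
A single repository of golden firmware images is most useful when every image is verified before it is pushed to a device. Here is a minimal sketch using SHA-256 checksums from Python's standard `hashlib` module. The manifest layout and the file name `switch-os.bin` are assumptions for illustration, and the image bytes are stand-ins for a real file.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_image(name: str, data: bytes, manifest: dict) -> bool:
    """True only if the image is listed in the manifest and its hash matches."""
    expected = manifest.get(name)
    return expected is not None and sha256_of(data) == expected


# Stand-in bytes; a real workflow would read the image file from the repository.
golden = b"firmware-build-1"
manifest = {"switch-os.bin": sha256_of(golden)}

print(verify_image("switch-os.bin", golden, manifest))       # prints True
print(verify_image("switch-os.bin", b"tampered", manifest))  # prints False
print(verify_image("unknown.bin", golden, manifest))         # prints False
```

Keeping the manifest under version control alongside the configurations gives you one place to audit which image each rollout was supposed to use.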

Edgenative Automation

- Must support asynchronous automation tasks (locations must be able to perform tasks independent from a main controller) to ensure scalability, resilience, and fast response times to time-sensitive events like power outages

- Automation tools should be able to use all available connections, including serial console connections, to ensure connectivity to devices during firmware updates or restarts
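
The asynchronous requirement can be sketched with Python's `asyncio`. In the example below, each site handles a time-sensitive event on its own, and a failure at one site does not block or cancel the others. This is a single-process simulation under assumed site names and delays; in a real deployment each task would run on hardware at the location itself rather than on a central controller.

```python
import asyncio


async def site_task(site: str, delay: float, fail: bool = False) -> str:
    """Simulate a site-local agent reacting to an event such as a power outage."""
    await asyncio.sleep(delay)  # stands in for local work, e.g. a graceful shutdown
    if fail:
        raise RuntimeError(f"{site}: local task failed")
    return f"{site}: handled locally"


async def run_sites():
    jobs = [
        site_task("branch-a", 0.02),
        site_task("branch-b", 0.01, fail=True),
        site_task("branch-c", 0.03),
    ]
    # return_exceptions=True keeps one site's failure from cancelling the rest;
    # results come back in the same order as the jobs list.
    return await asyncio.gather(*jobs, return_exceptions=True)


results = asyncio.run(run_sites())
for result in results:
    status = "FAILED" if isinstance(result, Exception) else "ok"
    print(status, result)
```

Here `branch-b` fails while `branch-a` and `branch-c` still complete, which is the behavior you want when a controller or one location is having a bad day.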

Out-of-Band Management

- Confirm that the orchestration layer reaches all device elements of the production infrastructure via out-of-band, through serial console ports, management ports, Web UI, and KVM in legacy systems

- Use Gen 3 serial consoles that offer extensible, edge-native automation for resilience against outages, and extensible software so you can run the automation of your choice (Ansible, Gluware, SaltStack, etc.)

- Ensure security at hardware, software, and management levels (encrypted disk, TPM, secure boot, latest kernel and patches, two-factor authentication)

- Ensure deployability of third-party solutions/agents, including VMs and containers, onto the Gen 3 OOB solution in order to collapse the required stack in each location

Resilient & Secure OOB connectivity

- The deployed OOB solution and connectivity to the IT/OT layer must be physically separated from the production system

- Ensure a double-ring network, which keeps the out-of-band infrastructure WAN (OOBI-WAN) for automation truly separate from the production network at every level

- Ensure resilient OOBI-WAN by choosing solutions with a wide range of connectivity options like 5G radios

- Simplify OOBI-WAN by selecting an automated VPN setup between all nodes through an OOB SD-WAN solution
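
Separation "at every level" can be checked mechanically once you have an inventory of what each network depends on. The sketch below compares a production path and an out-of-band path layer by layer and reports anything they share. The layer names and component names are hypothetical inventory data made up for this example.

```python
def shared_dependencies(production: dict, oob: dict) -> dict:
    """Return, per layer, the components that both networks rely on."""
    shared = {}
    for layer in sorted(production.keys() & oob.keys()):
        overlap = set(production[layer]) & set(oob[layer])
        if overlap:
            shared[layer] = sorted(overlap)
    return shared


# Hypothetical inventory for one site.
production = {
    "uplink": ["mpls-carrier-a"],
    "switch": ["core-sw-1"],
    "power": ["pdu-1"],
}
oob = {
    "uplink": ["5g-carrier-b"],
    "switch": ["core-sw-1"],   # the OOB path still crosses a production switch
    "power": ["pdu-2"],
}

print(shared_dependencies(production, oob))  # prints {'switch': ['core-sw-1']}
```

An empty result is the goal: any shared component is a place where a production outage or a bad automation run could also take out the recovery path.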

Tools

- Ensure a set of tools at each edge location (or top of rack location) that offers storage and TFTP services, including jumpboxes

- Do not use Raspberry Pi or other unmanaged devices that add failure points and vulnerabilities (unpatched OS)

- Ensure the ability to run Docker and VM images for remote troubleshooting and experience monitoring

- Enable secure power cycling via Gen 3 OOB connected to PDU
