Where did the Network Automation Blueprint come from?

If automation isn’t among your top three IT priorities, it should be. Many Fortune 500 organizations are recognizing the benefits automation brings to infrastructure availability and security. It keeps downtime at bay, and according to Gartner, downtime can cost $500k or more per hour. Automation also closes security gaps before they lead to a breach, and IBM’s 2022 Cost of a Data Breach report puts the average cost of a breach at $4.35 million. Automation is now important enough that Gartner coined the term ‘hyperautomation’, drawing C-level attention to make it a top-three funded IT project for closing these downtime and security gaps.

There’s just one major problem: there has been no established set of automation best practices. Without them, automation is risky, especially when changes run directly against production infrastructure, and the resulting anxiety postpones projects.

ZPE Systems has spent the last eight years solving this problem from the perspective of large enterprises. During that time, ZPE worked with customers that include the biggest names in tech, social media, ecommerce, retail, and other industries to learn exactly how they achieve not just automated environments, but hyperautomated ones. These best practices were captured in detail and refined with the help of ONUG’s Hyperautomation Working Group. They are now available as a 40-page best practices and reference architecture document that you can download.

In this post, let’s take a step back to examine why companies need automation, and why the new best practices document is a must-have.

Why do companies need automation?

Twenty years ago, data and applications lived in a centralized data center. Equipment stacks were housed in select locations and could be managed with the right headcount. Maintaining SLAs was simpler not just because infrastructure had a more localized footprint, but also because customers had lower demand for digital services. Put simply, nobody was collaborating on Google Docs or streaming Netflix back then.

Fast forward to the adoption of the cloud, and infrastructure exploded. The largest tech and Internet companies were the first to deploy hybrid models, which mix services that live on-prem and in the cloud. The amount and distribution of their infrastructure grew exponentially, and so did demand, as customers came to expect reliable services for remote work, video streaming, online banking, and more.

Two big problems needed to be solved:

- The amount and distribution of infrastructure drastically outpaced IT’s ability to manage it, which put SLAs in jeopardy

- The hybrid model created a wide and porous attack surface, where buggy software and disjointed solutions could easily be exploited

In 2013, one of our first customers was a hyperscaler with massive data centers and other networking sites distributed across the globe. It was abundantly clear that automation was central to how they upheld high SLAs (~99.99% uptime) and kept global user data secure at all times.

Through discussions with this customer and similar companies, we were able to uncover how automation solves these operational problems.

- Automation, especially hyperautomation, significantly increases IT’s capacity to manage infrastructure at scale. Network engineers can only be in one place at a time, yet they’re expected to operate hundreds or thousands of servers, routers, branch gateways, firewalls, and other devices. Automation helps them scale their efforts, so configuration management, troubleshooting, and equipment recovery can be performed automatically to uphold stringent SLAs.

- Automation helps shrink the attack surface. Because networks include solutions from many vendors, they rely on frequent firmware and software updates to address emerging security vulnerabilities. Manually updating thousands of devices just isn’t feasible, but neither is leaving infrastructure unpatched and vulnerable. Automation lets network engineers push updates across their entire infrastructure.
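The blueprint’s own procedures aren’t reproduced in this post, so the following is an illustration rather than the blueprint’s method. One common way to push updates across a large fleet without risking all of it at once is a staged, wave-based rollout. The minimal Python sketch below assumes a hypothetical `push_update(device)` callable that applies an update and returns `True` if the device comes back healthy; `rollout_in_waves`, `wave_size`, and `max_failures` are likewise illustrative names, not part of any ZPE product.

```python
from typing import Callable, List


def rollout_in_waves(
    devices: List[str],
    push_update: Callable[[str], bool],  # hypothetical: applies the update, True if healthy
    wave_size: int = 10,
    max_failures: int = 0,
) -> dict:
    """Push an update to devices in fixed-size waves.

    After each wave, the rollout halts if more than `max_failures` devices
    have failed, so a bad image cannot reach the whole fleet.
    """
    if wave_size < 1:
        raise ValueError("wave_size must be at least 1")
    updated: List[str] = []
    failed: List[str] = []
    for start in range(0, len(devices), wave_size):
        wave = devices[start:start + wave_size]
        for device in wave:
            if push_update(device):
                updated.append(device)
            else:
                failed.append(device)
        if len(failed) > max_failures:
            # Halt: devices in later waves keep their current firmware.
            skipped = devices[start + wave_size:]
            return {"updated": updated, "failed": failed, "skipped": skipped, "halted": True}
    return {"updated": updated, "failed": failed, "skipped": [], "halted": False}


# Usage: simulate a fleet where one device fails its post-update check.
fleet = [f"router-{i}" for i in range(25)]
result = rollout_in_waves(fleet, push_update=lambda d: d != "router-12", wave_size=10)
print(result["halted"], len(result["updated"]), len(result["skipped"]))
```

With 25 devices and a failure on `router-12`, the rollout halts after the second wave and leaves the last five devices untouched. A real deployment would replace the lambda with vendor-specific update and verification logic.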

Why is the Network Automation Blueprint a must-have?

The problem most companies face is that they don’t have best practices to follow, which leaves their production infrastructure at the mercy of their engineers’ skill level. A single bad change can cause a catastrophic outage with no efficient way to recover, and this is why so many are reluctant to adopt automation. Just look at this Fortinet CVE, which highlights the need for automated patching that can roll back to your last good configuration. How do you do that without worrying that you’ll cut your own legs out from under you?

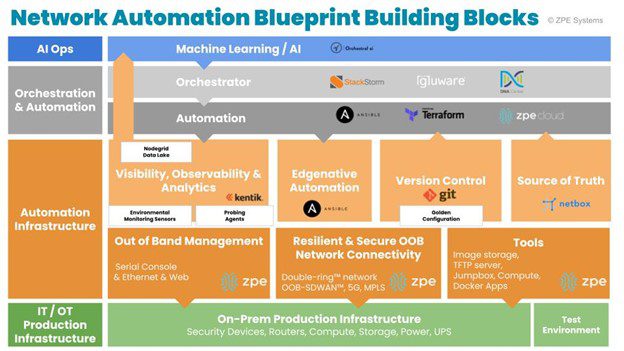

The good news is that this blueprint takes a page (or forty) from the world’s largest tech giants to define best practices and a reference architecture for implementing hyperautomation. The Network Automation Blueprint details the automation infrastructure layer and its necessary components, such as out-of-band management, a version control system, and edge-native automation, which help you automate regardless of your skill level. It also comes with a failsafe: an ‘undo’ button that lets you recover from errors or bad configurations.
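To make the ‘undo’ idea concrete, here is a minimal Python sketch of version-controlled configuration with automatic rollback to the last known-good state. It is an illustration under stated assumptions, not the blueprint’s implementation: `ConfigHistory` is a hypothetical class, and `push` and `healthy` stand in for whatever mechanism actually applies a configuration and verifies the device afterward. A production setup would typically keep these versions in a real version control system such as Git.

```python
from typing import Callable, List


class ConfigHistory:
    """Hypothetical per-device record of applied configurations, newest last."""

    def __init__(self, initial_config: str) -> None:
        # The starting configuration is assumed to be known-good.
        self.versions: List[str] = [initial_config]
        self.last_good: int = 0

    def current(self) -> str:
        return self.versions[-1]

    def apply(
        self,
        new_config: str,
        push: Callable[[str], None],  # hypothetical: sends a config to the device
        healthy: Callable[[], bool],  # hypothetical: post-change health check
    ) -> bool:
        """Push new_config; if the health check fails, push the last good config back."""
        self.versions.append(new_config)
        push(new_config)
        if healthy():
            self.last_good = len(self.versions) - 1
            return True
        # The "undo button": restore the last known-good version and record
        # the rollback as a new entry, so the history shows what happened.
        restored = self.versions[self.last_good]
        self.versions.append(restored)
        push(restored)
        self.last_good = len(self.versions) - 1
        return False


# Usage: simulate a device whose running config is stored in a dict.
running = {"config": ""}


def push(cfg: str) -> None:
    running["config"] = cfg


history = ConfigHistory("hostname edge-01")
history.apply("hostname edge-01\nntp server 10.0.0.1", push, healthy=lambda: True)
history.apply("hostname edge-01\nbroken", push, healthy=lambda: False)
print(running["config"])
```

After the failed change, the device ends up back on the NTP configuration, and the history records both the bad change and its reversal. A rollback only helps if the device is still reachable after a bad change; out-of-band management, one of the components the blueprint lists, is one way to keep that path open.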

This blueprint has also been validated by ONUG’s newly created Hyperautomation Working Group, which includes IT thought leaders from major brands that are implementing hyperautomation. Their consensus: automation is a necessity for the Fortune 500, and this blueprint is the standard for safely deploying and scaling automation initiatives.

To automate with a practical, proven approach, download the Network Automation Blueprint now.