Navigating the Risks of Using LLM-Generated Information in Business-Critical Applications

The potential for Large Language Models (LLMs) to enhance the efficiency of business operations is undeniable. However, the allure of readily available LLM-generated information masks significant risks, especially when used in high-stakes business contexts. This panel session will explore the inherent limitations of LLMs and how inaccurate, conflicting or biased outputs can compromise decision-making or disrupt operations and lead to financial losses, reputational damage or even legal liabilities.

Panelists will describe examples of how using LLMs without appropriate safeguards can result in costly negative outcomes. Not only can LLMs generate inaccurate or biased information, but they are also susceptible to prompt injection attacks, training data poisoning and vulnerabilities that can expose sensitive information. Panelists will also address the challenges of validating and auditing LLM-generated information and the lack of transparency in LLM reasoning processes.

The session will conclude with actionable strategies for mitigating these risks, including responsible deployment practices and robust governance frameworks, emphasizing the importance of human oversight and validation mechanisms for AI outputs.

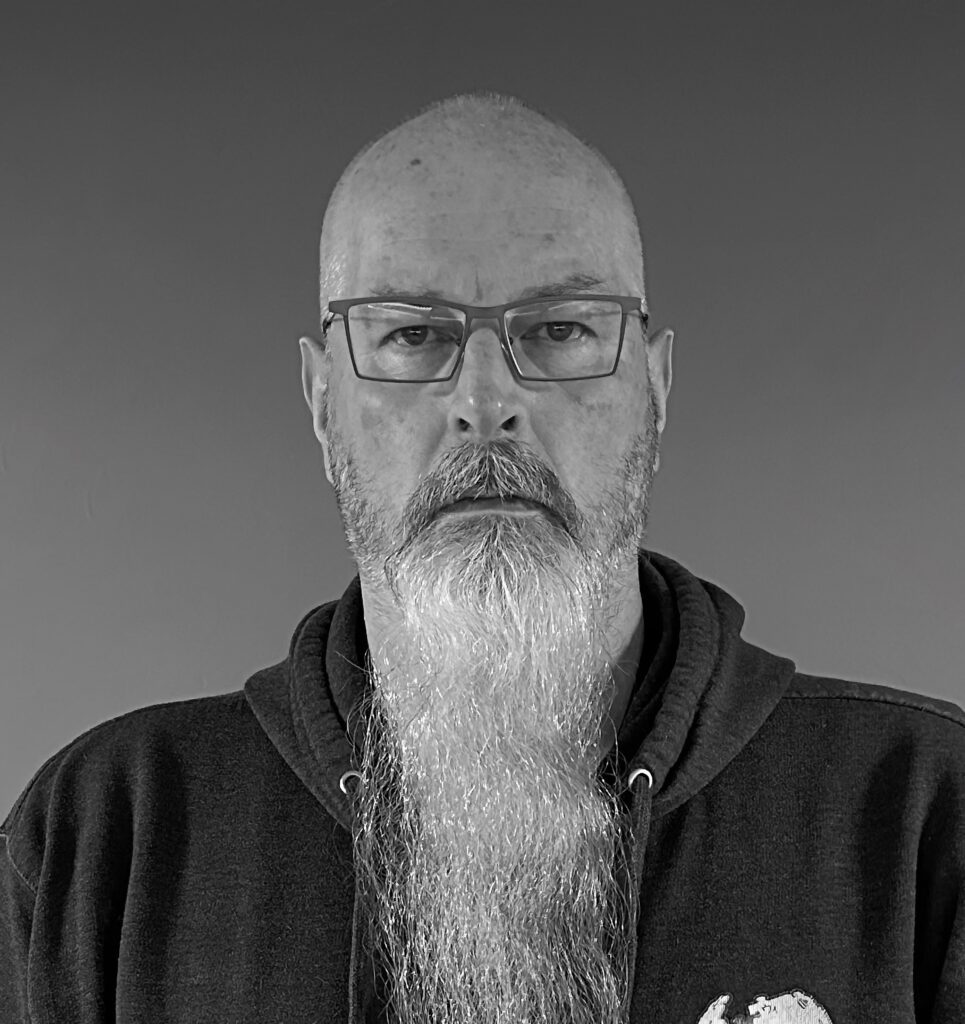

Chris has been in our industry since before its inception (the lack of hair helping to identify this). His most recent projects have been focused within the aerospace, deception, deepfake, identity, cryptography, AI/adversarial AI, and services sectors. Over the years, he’s founded or worked with numerous organizations specializing in human research, data intelligence, transportation, cryptography, and deception technologies.

These days he’s working on spreading risk, maturity, collaboration, and communication messaging across the industry. (Likely while coding his augmented EEG-driven digital clone that’s monitoring his Internet usage, and tea and biscuit consumption!)

When not working, he can be found in Eureka, Missouri, charging round the countryside on a mountain bike, or hunkered down with the kids experimenting on ways to take over the planet. From an observability perspective he’s large, hairy, often wears a kilt, and can be found on stage with a cuppa tea in hand trying to explain to audiences why they must ask more questions before clicking life’s big red button.

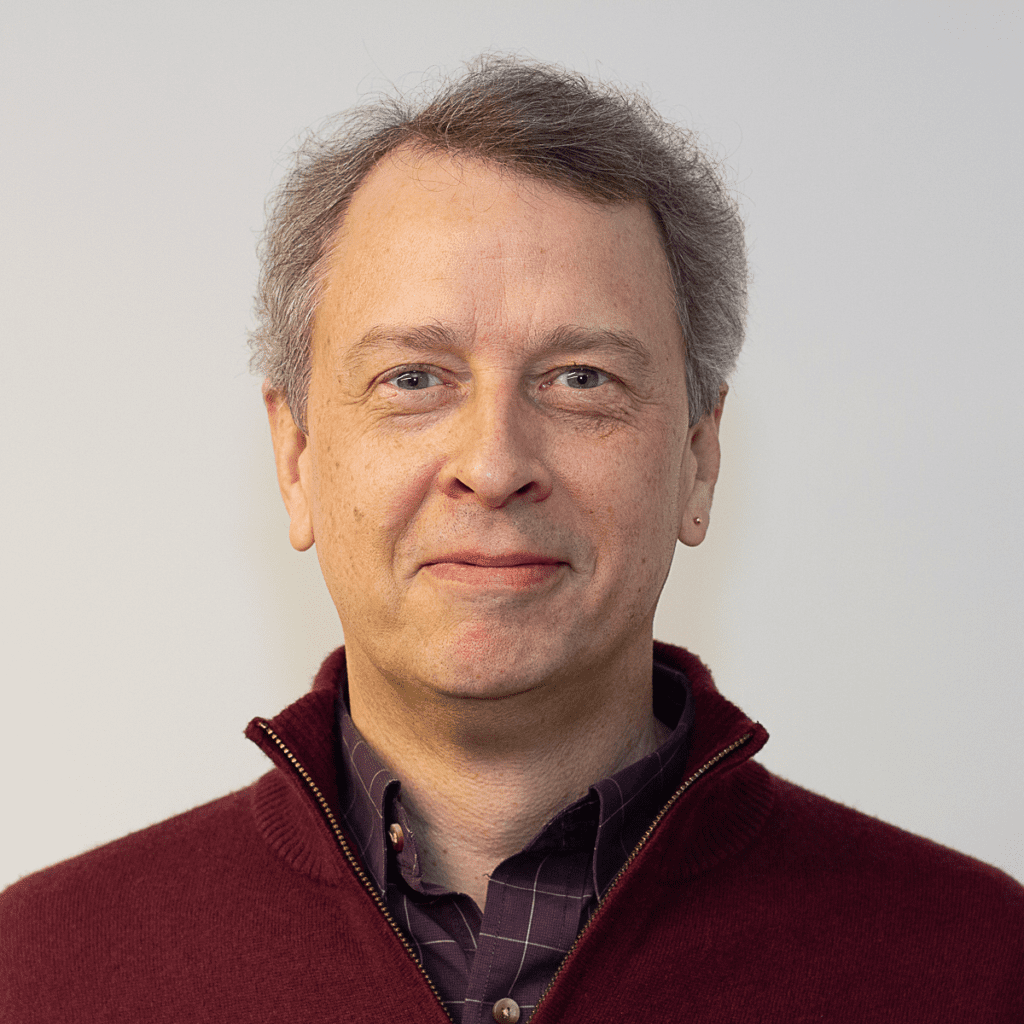

Eric Hanselman is the chief analyst at S&P Global Market Intelligence. He coordinates industry analysis across the broad portfolio of technology, media and telecommunications research disciplines, with an extensive, hands-on understanding of a range of subject areas, including information security, networks and semiconductors and their intersection in areas such as AI, 5G and edge computing. Eric helps S&P Global’s clients navigate these turbulent waters and capitalize on potential outcomes. He is a member of the IEEE, a Certified Information Systems Security Professional (CISSP) and a VMware Certified Professional. He is also a frequent speaker at leading industry conferences and hosts the Next in Tech technology podcast.

Certified Enterprise Cloud Architect focused on AI/ML global regulatory compliance and risk management in the aerospace, commercial, defense, medical, photonic, and software industries. Over twenty years of agile architecture development and evolution of systems that create competitive advantage and disrupt markets.